Hello,

I’m facing an issue with an alerting rule that is giving false positives when metrics increase right near the evaluation boundary. Here are the details:

Alerting Rule Query:

(

(sum(increase(metrics_listener_objects_count_total{appid="app_id",ListenerName="listener_name"}[3h])) < 1)

or

(absent_over_time(metrics_listener_objects_count_total{appid="app_id",ListenerName="listener_name"}[3h]))

)

and (sum(container_memory_usage_bytes{container="processor"}) > 0)

and hour() == 7 and minute() == 0

Setup:

- The rule runs once daily at 3 AM EST (7 AM UTC).

- It checks the last 3 hours of data.

- Alerting Criteria: alert only if there has been no ingestion (no increase) during that time.

Problem:

- I am getting false alerts when ingestion happens just after the evaluation boundary.

- For example, in one case, data was ingested at 12:01, but the alert fired anyway because

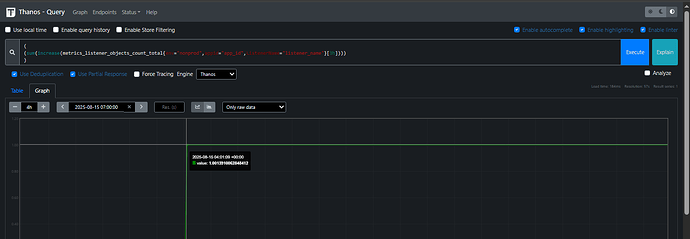

increase()evaluated over[12:01–03:00]seemed to return zero. - When I checked in Thanos directly, I could see that ingestion clearly happened in the window around 12:01 AM EST.

Questions:

- Is this a known limitation of how `increase()` samples counters at the evaluation boundary?

- Could query alignment / evaluation interval be causing this (e.g., ingestion at 12:01 but the evaluation missed it)?

- How can I handle the case where a metric was absent at the start of the evaluation period but later became present (data got ingested)? With `increase()`, this still shows `0`, but in reality ingestion started and I don't want an alert.

Is there a way I can tackle this edge case?
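One possible direction, as an untested sketch rather than a confirmed fix: suppress the "no increase" branch whenever the series exists at evaluation time but had no sample at the start of the 3h window, since that suggests it appeared mid-window. This assumes the series is still being exported when the rule evaluates and that "absent at the start" means no sample within the default lookback at `offset 3h`. It only covers the `increase()` branch, not the full rule above.

```promql
# Sketch (assumption: the series is still present at evaluation time).
# Left side: the existing "no increase in the last 3h" condition.
(
  sum(increase(metrics_listener_objects_count_total{appid="app_id",ListenerName="listener_name"}[3h])) < 1
)
unless
(
  # Non-empty only when the series is present now but had no sample 3h ago,
  # i.e. it first appeared somewhere inside the window.
  sum(metrics_listener_objects_count_total{appid="app_id",ListenerName="listener_name"})
  unless
  sum(metrics_listener_objects_count_total{appid="app_id",ListenerName="listener_name"} offset 3h)
)
```

Both sides are aggregated with `sum()` so they carry the same (empty) label set and `unless` can match them. A caveat with this approach: a short scrape gap exactly 3h before evaluation would also suppress the alert, so I'm not sure it is safe as-is.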

See the screenshots below for reference (timezone is UTC):

`increase()` function response: